AI Age Verification: How Facial Recognition Technology Ensures Regulatory Compliance

What AI-Powered Facial Age Verification Is

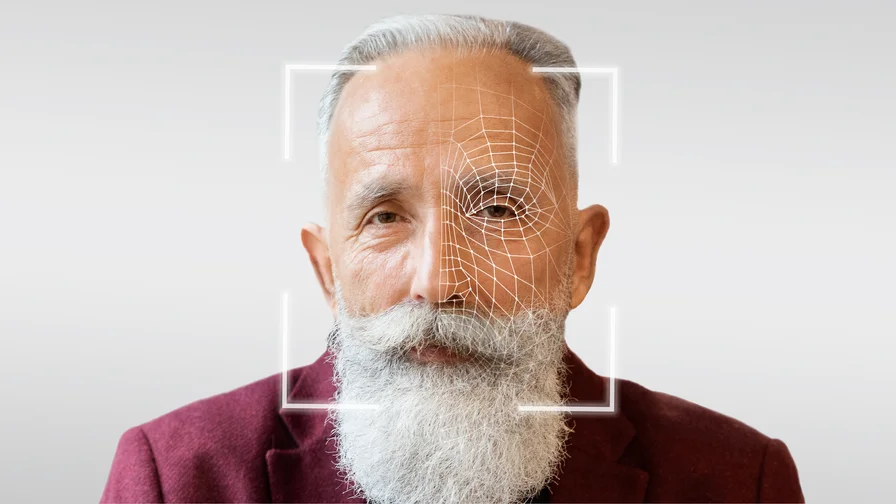

AI-powered facial age verification refers to automated age estimation using facial analysis, and it’s very different from traditional ID checks, or from “facial recognition” as most people understand it. With facial age estimation, a user simply takes a selfie or shows their face to the camera, and an AI model predicts their age range or confirms whether they are above a required age threshold. The process begins with face detection, where the system locates the human face in the image and separates it from other objects; this step runs automatically once the image is captured and needs no further input from the user. Because faces are constantly on display in both virtual and real-life environments, any technology that analyzes them this unobtrusively carries privacy risks, which is why the way the image is handled matters. No name, address, ID number, or other personal identity information is needed. The system does not attempt to identify who the person is; it only evaluates their approximate age based on facial features. This means it’s not performing facial recognition in the surveillance sense (no face is compared against a database). Instead, it’s closer to an AI-powered camera check: the algorithm analyzes the face and outputs an age estimate, such as “over 18” or “under 18,” along with a confidence score indicating the certainty of the result.

Because it doesn’t require scanning official documents or creating an identity record, facial age verification is faster and far less intrusive than traditional methods. A user can complete a selfie age check in about one second on average. There’s no need to rummage for a driver’s license, type personal details, or wait for a human to review an ID. The AI instantly processes the facial image and returns just the needed result (for example, a yes/no answer on whether the age requirement is met). Crucially, privacy is built in by design: modern solutions perform on-device or server-based analysis without retaining the selfie image or any biometric identifiers after the check. The photo a user provides is used only to calculate age and is then promptly deleted, typically within seconds. The platform implementing the check receives only an age result (e.g. confirmation the user is 18+) and never gains access to the person’s identity or the actual photo. In summary, AI facial age verification is a single-purpose, privacy-preserving tool: it answers “Are you old enough?” without collecting who you are. It is still fair to ask how sensitive data is handled. Age verification in general raises significant privacy concerns because some methods rely on sensitive personal information, such as government-issued IDs and facial images, and any system that collected and retained such data could create risks of biometric tracking across contexts.

This approach also contrasts with simple age gating (e.g. asking users to self-report age). Unlike a checkbox or birthdate field, which relies on the honor system, facial age estimation objectively verifies age characteristics without trusting user input. It provides a higher assurance level that regulators now increasingly expect, while avoiding the friction and data collection of full identity verification. By focusing purely on age, AI-powered verification strikes a balance: it’s quick and user-friendly for consumers, yet robust enough to satisfy compliance demands for accurate age checking. Still, there is a risk of mistake—AI age estimation tools may incorrectly classify users, leading to wrongful denial of access to content or account deactivation. This technology is especially important for platforms serving teens and young people, helping to ensure age-appropriate content and safety protections for these groups.

How Facial Recognition Technology Estimates Age

Face recognition technology more broadly is used in a wide range of applications where fast, contactless identity verification is required, such as airports, banking, healthcare, and government services. AI age estimation builds on the same computer vision foundations but answers a narrower question: it analyzes facial features and patterns that correlate with age, essentially using computer vision and machine learning to do what a human does when guessing someone’s age, but with far greater consistency. When a user’s face is captured (via webcam or phone camera), the image is converted into a grid of pixels and numerical data. The technical process begins with face detection as the first step; the age model then analyzes the features of the detected face, whereas facial recognition algorithms and face recognition software analyze the unique features of a face for identification and authentication. These AI models are trained on massive datasets of faces with known ages, enabling them to learn which visual features tend to indicate youth versus middle age, for example. Critically, how accurately and fairly the system performs depends on the diversity and quality of the training data, which is collected from diverse populations (including different ethnicities, genders, and age groups) so that the system can recognize age cues broadly and minimize bias. (One leading provider notes its algorithm was trained on millions of anonymous face images from around the world, each tagged with the person’s birth date, to achieve high accuracy across demographics.)

Face recognition systems are deployed in various sectors to identify individuals, control access, and enhance security. For example, facial recognition can streamline patient registration in a healthcare facility, control access to patient records, detect fraud by uniquely identifying users creating a new account on an online platform, and authenticate banking transactions by recognizing a user's face instead of using passwords. In airports, face recognition technology can improve security and efficiency by allowing travelers to skip long lines and walk through automated terminals. Automated facial recognition is also used in law enforcement, security screening, and surveillance, raising ethical and legal concerns. These systems serve customers in both public and private sectors, including travelers, patients, and citizens, and are increasingly used to facilitate access to government services.

Modern facial age estimation models have made dramatic accuracy improvements in recent years. Independent evaluations by NIST (the U.S. National Institute of Standards and Technology) show that top algorithms can now estimate ages within roughly 2 years of the real age on average, across a wide range of subjects. For specific age brackets that are most critical (like distinguishing a 17-year-old from an 18-year-old), the precision is even higher. In fact, some cutting-edge systems report a mean absolute error below 1 year when estimating ages of teenagers (roughly 0.9 years error for ages 9–21). In practical terms, the AI’s guess is usually very close to the truth – often more consistent than human judgment. (Human staff, after all, can be very subjective in guessing age. Studies show people’s estimates of age are biased by factors like race and gender. Even in retail settings, “human judgement is often a weak link” – a tired or intimidated clerk might misjudge a customer’s age, leading to mistakes. AI doesn’t get fatigued or feel social pressure, so it applies the same criteria every time.)
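To make the accuracy metric concrete: mean absolute error (MAE) is the average of the absolute gaps between estimated and true ages. The sketch below computes it on a small sample that is invented purely for illustration; the numbers are not taken from NIST or from any provider, and the function name is our own.

```python
def mean_absolute_error(true_ages, estimated_ages):
    """Average absolute gap, in years, between true and estimated ages."""
    if len(true_ages) != len(estimated_ages):
        raise ValueError("both sequences must have the same length")
    if not true_ages:
        raise ValueError("at least one sample is required")
    total_gap = sum(abs(t - e) for t, e in zip(true_ages, estimated_ages))
    return total_gap / len(true_ages)


# Hypothetical evaluation sample, invented for illustration only.
true_ages = [16, 17, 18, 19, 25]
estimated_ages = [17, 17, 20, 18, 24]

mae = mean_absolute_error(true_ages, estimated_ages)
print(f"MAE: {mae:.1f} years")
```

An MAE near 1 year still allows individual estimates to be off by 2 years or more, which is one reason deployments rely on confidence thresholds rather than the raw age guess.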

To make a pass/fail decision, the system uses confidence scoring and age thresholds. Rather than outputting just an exact age guess, the AI often produces a probability distribution or confidence level. For example, it might determine there’s a 98% probability the user is an adult. The platform can set a decision threshold (say, 95% confidence of being 18+) above which the user is cleared as old enough. If the confidence is too low, for instance because the person looks very close to the cutoff age, the system may not immediately pass them. Instead, it could either fail safe (treat as underage) or prompt for a secondary verification method (like asking for an ID upload) to be sure. Many implementations also allow a “retry”: if the first selfie was unclear or borderline, the user might try again, perhaps in better lighting or with a neutral expression, to get a more confident result. This approach ensures that the rare errors err on the side of caution, greatly reducing the chance that an actual minor slips through as an “adult.” In fact, one deployed system was found to be 99.97% accurate in correctly classifying adults vs. minors, thanks to such calibrated thresholds, a level of assurance that manual checks or self-reporting are unlikely to match. By contrast, recognition software used in law enforcement and security contexts also provides a confidence score, but it does so while searching databases to identify people or suspects, a lookup that age estimation does not perform.
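The decision logic described here can be sketched as a small function. This is a minimal illustration, not any vendor's implementation: the names `Decision` and `decide`, the two-attempt limit, and the `fallback_available` flag are all assumptions. Only the 95% threshold comes from the example in the text.

```python
from enum import Enum


class Decision(Enum):
    PASS = "pass"          # cleared as old enough
    RETRY = "retry"        # ask for another selfie
    FALLBACK = "fallback"  # secondary method, e.g. an ID upload
    DENY = "deny"          # fail safe: treat as underage


def decide(prob_over_age, attempts_used, pass_threshold=0.95,
           max_attempts=2, fallback_available=True):
    """Map a model confidence to an outcome, erring toward caution.

    prob_over_age: model's probability that the user meets the age limit.
    attempts_used: selfies taken so far, including the current one.
    """
    if not 0.0 <= prob_over_age <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if prob_over_age >= pass_threshold:
        return Decision.PASS
    if attempts_used < max_attempts:
        return Decision.RETRY
    # Out of retries: escalate if possible, otherwise fail safe.
    return Decision.FALLBACK if fallback_available else Decision.DENY


print(decide(0.98, attempts_used=1))  # confident adult
print(decide(0.80, attempts_used=1))  # borderline, first try
print(decide(0.80, attempts_used=2))  # borderline, retries exhausted
```

Because anything below the threshold never returns `PASS`, a borderline minor cannot be cleared by the model alone; the cost is that some genuine adults are sent to a retry or a secondary check.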

It’s worth noting that sophisticated age verification solutions also incorporate liveness detection and anti-spoofing measures as part of the age estimation process. Before trusting the age result, the system verifies that there is a real, live person in front of the camera – not a photo of someone older, a mask, or a deepfake video. Techniques like prompting the user to turn their head, analyzing lighting on the face, or using motion/3D depth sensors help confirm liveness. Only after the face is confirmed genuine does the AI analyze age. This prevents would-be evaders from fooling the check by holding up a picture of an older individual. With these combined technologies (liveness checks, advanced AI models, and smart thresholding), facial age verification can reliably estimate age in under a second of processing, and do so with a high degree of accuracy and security.
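The ordering matters: the age model should only run once liveness has been confirmed. A minimal sketch of that gate follows, with the liveness detector and age model passed in as plain callables. Both are hypothetical stand-ins here, since real detectors are vendor-specific and not described in this article.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class CheckResult:
    live: bool
    prob_adult: Optional[float]  # None when the age model was never run


def run_age_check(frame: bytes,
                  is_live: Callable[[bytes], bool],
                  estimate_prob_adult: Callable[[bytes], float]) -> CheckResult:
    """Run liveness first; only a genuine face reaches the age model."""
    if not is_live(frame):
        return CheckResult(live=False, prob_adult=None)
    return CheckResult(live=True, prob_adult=estimate_prob_adult(frame))


# Stub detectors for illustration: a real system would call actual models.
calls = []


def fake_age_model(frame: bytes) -> float:
    calls.append("age_model")
    return 0.97


spoofed = run_age_check(b"printed-photo", lambda f: False, fake_age_model)
genuine = run_age_check(b"live-selfie", lambda f: True, fake_age_model)
print(spoofed, genuine, calls)
```

In the spoofed case the age model is never invoked, so a held-up photograph of an older person produces no age result at all.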

AI age estimation technology can also use machine learning to infer a user's age from non-facial signals such as video search history and account longevity. However, the effectiveness of AI age estimation is often questioned: facial analysis algorithms can have high error rates and exhibit demographic biases, particularly for people of color and women, and accuracy can vary significantly with the quality of the training data used to develop them. Without a human oversight process, AI age verification can lead to wrongful denials of access to content. Restricting access based on estimated age affects the user experience on platforms like YouTube and Spotify, and platforms like Spotify may delete accounts if users do not comply with age verification processes, which can lead to frustration. Additionally, storing sensitive data such as government IDs and selfies on internet-connected systems introduces data security risks, which underlines the importance of safeguarding personal data.

Ensuring Regulatory Compliance With AI Age Verification

Adopting AI-driven facial age verification can help businesses meet or exceed the requirements of emerging age-verification laws and standards. Regulators are increasingly signaling that such biometric age checks are an acceptable – even preferred – method for compliance when implemented with proper privacy safeguards. However, age verification processes often require users to submit sensitive personal information, such as government-issued IDs, raising additional privacy considerations. In the UK, for example, the communications regulator Ofcom has formally approved facial age estimation as a “high assurance” age-checking method for online safety compliance. This means that websites subject to the UK Online Safety Act can deploy AI age estimation to fulfill their duty of preventing underage access to adult content or other restricted services. Similarly, regulatory bodies in other countries have endorsed or certified these technologies. Germany’s digital youth protection regulators (KJM and FSM) have publicly endorsed at least one facial age verification solution for its effectiveness and privacy by design. And in the U.S., businesses have petitioned the FTC to recognize facial age estimation as an allowed tool under COPPA for obtaining parental consent, citing its strong performance in distinguishing adults from children. In short, global regulators now view robust age estimation AI as aligned with compliance obligations, as long as it’s used responsibly. Notably, organizations like the Electronic Frontier Foundation advocate for a ban on government use of facial recognition technology due to privacy concerns, reflecting ongoing debate about the technology’s role in regulation.

Crucially, AI age verification systems are built to support core privacy principles like those in GDPR, which most age-regulation frameworks insist upon. A well-designed facial age check embodies data minimization and purpose limitation: it gathers the minimum info necessary (a facial image solely to calculate age), uses it only for the specific purpose of age verification, and does not store the data afterward. This is far more privacy-preserving than collecting a trove of personal details or ID scans just to confirm age. The process can also be deployed in a “privacy by design” manner – for instance, doing the analysis on the user’s device or in a secure ephemeral session, so that no biometric data is retained by the platform. Privacy experts have expressed concerns that algorithmic face scans for age estimation can lead to inaccuracies and discrimination, emphasizing the need for strict safeguards and oversight. Compliance solutions often undergo independent audits or certification to ensure they meet standards like ISO 27566-1 for age assurance, which emphasize strong privacy controls and alignment with laws like GDPR and COPPA. By using an AI solution that has been third-party tested or certified (e.g., by NIST or an Age Check Certification Scheme), companies can demonstrate to regulators that their age verification process is both effective and compliant with data protection requirements.

Another compliance benefit of AI age verification is the built-in auditability and reporting it can offer. Every check performed can be logged (without storing any personal data) as a simple record that an age verification was completed for user X at time Y with result Z. These logs or aggregated reports provide evidence of due diligence. If regulators ask for proof that only adults are accessing a service, the platform can furnish stats like “We performed 5 million age checks last quarter and blocked 150,000 underage attempts,” all without ever exposing individual identities. The systems can also be configured to flag and record any failed or refused checks (for example, if someone declined to complete an age check), which is useful from an audit perspective. In essence, AI-driven solutions make it easier for compliance teams to show their work – they can demonstrate that a robust mechanism consistently enforces age rules, in line with legal obligations. This level of auditability, combined with privacy safeguards, positions AI age verification as a transparent and regulator-friendly approach to meeting the new age-restriction mandates. However, the implementation of AI age verification can lead to a migration of users to less regulated platforms if they find the verification process cumbersome, which is an important consideration for businesses and regulators alike.
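One way such a log could look is sketched below. It is an assumption-laden illustration: the record fields, the result labels, and the use of a keyed hash (HMAC-SHA256) as a pseudonymous user reference are our own choices, not a documented scheme. Whether a keyed hash still counts as personal data depends on the applicable law, so treat that design choice as something to confirm with counsel.

```python
import hashlib
import hmac
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    user_ref: str    # keyed hash of the internal account ID
    checked_at: str  # ISO 8601 timestamp in UTC
    result: str      # "passed", "blocked", or "refused"


def make_record(account_id, result, secret_key, now=None):
    """Build a log entry that holds no image, name, or raw account ID."""
    if result not in {"passed", "blocked", "refused"}:
        raise ValueError(f"unknown result: {result}")
    digest = hmac.new(secret_key, account_id.encode("utf-8"), hashlib.sha256)
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    return AuditRecord(digest.hexdigest()[:16], timestamp, result)


def summarize(records):
    """Aggregate counts per result, suitable for a compliance report."""
    return dict(Counter(record.result for record in records))


# Hypothetical key and account IDs, for illustration only.
key = b"example-key-for-illustration-only"
log = [
    make_record("acct-1001", "passed", key),
    make_record("acct-1002", "blocked", key),
    make_record("acct-1003", "passed", key),
    make_record("acct-1004", "refused", key),
]
print(summarize(log))
```

The aggregate counts are what a regulator-facing report would draw on; the individual records exist only to show that a check happened and what its outcome was.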

Finally, deploying facial age estimation helps companies satisfy multi-jurisdictional requirements simultaneously. With regulators from the EU to Asia-Pacific all setting their own age assurance expectations, an adaptable AI solution allows a unified approach. The system’s threshold can be tuned to local age limits (e.g. 18 for adult content, or 16 for certain social media in some countries) and configured to comply with each region’s privacy laws. This flexibility means businesses can standardize their age verification workflow globally while easily adjusting parameters to stay within the law in each market. All told, leveraging AI-powered age verification not only keeps underage users out, but does so in a manner that proactively fulfills regulatory requirements, minimizes liability, and builds trust with authorities and consumers alike.
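Per-market tuning of this kind is often just a lookup table. The sketch below assumes a hypothetical `RegionPolicy` structure and placeholder region names; the age limits of 18 and 16 echo the examples in the text, while the confidence values and retention settings are invented and do not describe any actual country's rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionPolicy:
    min_age: int               # legal age limit for the service
    pass_confidence: float     # confidence needed to clear a user
    retain_image_seconds: int  # 0 means delete right after the check


# Strictest settings, used when a market has no explicit entry.
DEFAULT_POLICY = RegionPolicy(min_age=18, pass_confidence=0.99,
                              retain_image_seconds=0)

# Placeholder markets and services, for illustration only.
POLICIES = {
    ("region_a", "adult_content"): RegionPolicy(18, 0.95, 0),
    ("region_b", "social_media"): RegionPolicy(16, 0.95, 0),
}


def policy_for(region: str, service: str) -> RegionPolicy:
    """Return the configured policy, falling back to the strictest one."""
    return POLICIES.get((region, service), DEFAULT_POLICY)


print(policy_for("region_b", "social_media"))
print(policy_for("unknown_region", "adult_content"))
```

Defaulting to the strictest policy means a missing configuration entry results in over-checking rather than under-checking.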

Privacy and User Trust: Addressing Common Concerns

One of the biggest concerns when using any facial analysis is privacy – and fortunately, facial age verification is specifically engineered to protect user privacy and build trust. First and foremost, this technology is not “facial recognition” in the traditional sense. It does not identify the person or compare them against any database of photos. As Instagram, which uses AI age estimation, explicitly tells its users: “The technology cannot recognize your identity – just your age.” This distinction is crucial. The system is only looking at physical age traits, not attempting to uniquely label or remember the individual. In addition, reputable providers ensure that no biometric identifiers are stored that could later be used to recognize someone. The facial image is processed on the fly for age and then promptly discarded. There’s no retention of the photo, faceprint, or any personal data, so the risk of a data breach or misuse of sensitive information is vastly reduced. In effect, the user remains anonymous – the platform gets an answer about age eligibility but doesn’t learn who the user is.

Being transparent with users and obtaining consent is another key part of the privacy-first design. Users should know when and why they’re being asked to do a face scan. In practice, digital platforms implement clear prompts like: “Please take a selfie. We’ll only use it to estimate your age and won’t store the image.” Explaining that the check is solely for age verification and that no identity will be revealed helps alleviate fears. Many users are understandably wary of facial scans, but when it’s made clear that this is not an identity check and nothing will be saved, they tend to be much more comfortable. For example, when given a choice between different verification methods, a significant majority of people (including parents verifying a child’s age) prefer the facial estimation option once they understand its privacy advantages. In one large-scale deployment, over 70% of parents chose a quick face scan over alternatives like providing ID or credit card info, when assured that the face image isn’t retained. This indicates that user trust increases when the process is transparent and minimalistic in data usage.

Designing the system with privacy in mind not only satisfies legal requirements (like GDPR’s principles), but also improves user experience and trust. A privacy-first approach means the process feels less invasive, which reduces user hesitation. Since the user does not have to hand over sensitive documents or personal details, they are less likely to abandon the signup or purchase. Companies have found that completion rates improve when using age estimation because users appreciate the convenience and confidentiality (no one is asking them to upload their passport or enter a credit card just to prove age). Additionally, because the AI check is typically instantaneous, it avoids prolonged waiting that might frustrate users. The speed and subtlety of the experience – often just a few seconds with a camera – can make the age gate almost seamless. All of this fosters a sense of trust: the platform demonstrates it’s serious about protecting minors but also respectful of user privacy. By clearly communicating how the technology works and safeguarding the data, companies can turn age verification from a point of friction into an experience that users accept and even appreciate as a responsible safety measure.

When Facial Age Verification Is the Right Choice

AI facial age verification is a versatile tool, but it’s important to understand the scenarios where it works best and where additional measures might complement it. The ideal use cases for facial age estimation are situations where you need to quickly and seamlessly verify that a customer is above a certain age without gating access with heavy identity checks. This includes content gating for adult or mature content (such as pornography websites or R-rated video streaming), where the primary goal is simply to keep under-18s out. It’s also well-suited for age-restricted purchases online – for example, ordering alcohol, tobacco, or vape products, as well as lottery tickets or online gambling access. E-commerce and delivery services can integrate a selfie age check at purchase or delivery to ensure the buyer is of legal age, instead of relying on couriers to check IDs at the door. Social media and gaming platforms are another key use case: many are now required to verify customer ages, especially if they have features that are unsafe for children. A facial age check during account sign-up or when accessing certain features (like open chat or 18+ content) can help these platforms comply with youth safety rules in a user-friendly way. Even dating apps and online communities that are meant for adults can deploy age estimation to prevent underage customers from joining. In all these cases, facial verification provides a fast, one-and-done screening that meets the need without creating big hurdles for customers.

However, it’s important to note that AI age verification systems often require customers to submit sensitive personal information, raising privacy concerns. Additionally, AI age verification can restrict access to content based on estimated age, which can impact customer experience on platforms like YouTube and Spotify if legitimate users are incorrectly denied access.

That said, there are scenarios where additional verification might be needed on top of facial age estimation. One such case is if the risk level or legal requirements demand a higher level of assurance about identity. For instance, certain financial services or gambling sites might need to verify not just age but also the person’s identity (KYC requirements) to comply with anti-fraud or anti-money-laundering laws. In those cases, a facial age check could be the first step (to ensure the customer is an adult) but it might be followed by an ID document check or database lookup for full identity verification. Another scenario is edge cases where the AI cannot confidently determine age. Most systems have a very high accuracy, but consider a customer who is, say, 17½ years old trying to pass as 18 – the AI might flag low confidence due to the closeness in age. Here, a fallback method should kick in: the customer could be asked to upload an ID or have a live video call verification to confirm age. Platforms often design a multi-step flow where the AI is the primary method due to its convenience, and then alternate methods are offered if the AI result is inconclusive or indicates the customer might be underage. For example, Microsoft’s Xbox now gives customers in certain regions a menu of verification options – they can choose to upload a government ID, use a selfie-based facial age estimation, verify via a mobile phone record, or even do a credit card check. If the facial estimation fails or isn’t chosen, another method can ensure no legitimate adult is turned away. This layered approach combines the low-friction benefits of AI with the backstop of traditional methods for the few cases where it’s needed.
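The layered flow can be sketched as an ordered list of methods, tried from least to most intrusive until one confirms the customer is an adult. The method names mirror the options mentioned above, but the function, the return convention, and the stubbed checks are assumptions made for illustration.

```python
from typing import Callable, List, Optional, Sequence, Tuple

# Each check returns True (adult confirmed), False (not confirmed),
# or None (inconclusive).
Method = Tuple[str, Callable[[], Optional[bool]]]


def verify_adult(methods: Sequence[Method]) -> Tuple[bool, List[str]]:
    """Try each method in order; stop at the first that confirms adulthood."""
    tried: List[str] = []
    for name, check in methods:
        tried.append(name)
        if check() is True:
            return True, tried
    # No method confirmed adulthood, so access is denied.
    return False, tried


# Stubbed checks: the facial estimate is inconclusive, the ID upload succeeds.
flow = [
    ("facial_estimation", lambda: None),
    ("id_upload", lambda: True),
    ("credit_card", lambda: True),
]
passed, tried = verify_adult(flow)
print(passed, tried)
```

Here the credit card check is never reached, because the flow stops as soon as one method succeeds; a customer whom every method fails to confirm is denied.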

It’s also worth considering situations like very young customers or privacy-sensitive contexts. Facial age estimation works for estimating if someone is, say, below 13 (the COPPA threshold) or below 18, but if a platform specifically targets children (e.g. a kids’ educational platform wanting to allow only under-13 customers), the technology might flag everyone who looks older than 13 – which is a different use case (ensuring someone is not too old rather than not too young!). In such cases, other methods (like parent verification) might be more appropriate. Additionally, for customers who don’t have access to a camera or have accessibility needs that make a face capture difficult, an alternative should be available – perhaps knowledge-based age quizzes or in-person verification. Fortunately, most platforms report that the vast majority of customers opt for and succeed with the selfie method when it’s offered, but providing a fallback ensures no customer is completely blocked due to technology constraints.

In summary, facial age verification is the right choice in most scenarios where quick, privacy-preserving, and accurate age checks are needed at scale. It shines in consumer-friendly applications where adding too much friction would hurt the business or customer experience. It is particularly powerful for high-volume platforms (social networks, online marketplaces, streaming and gaming services) and transactional contexts (e-commerce, digital content) where manual checks can’t keep up. However, if the AI age verification process is too cumbersome, there is a risk that customers may migrate to less regulated platforms to avoid the inconvenience. Businesses should still analyze their risk profile and compliance needs – in high-risk cases, they can deploy facial AI as part of a broader age assurance toolkit, combining it with document verification or other techniques when necessary. By intelligently routing customers through the least intrusive check first (the AI scan) and only escalating to heavier checks when required, companies can meet compliance obligations efficiently without alienating customers.

Conclusion: A Smarter Path to Age Compliance

Age verification is no longer a trivial checkbox in today’s regulatory environment; it’s a mandatory aspect of operating a digital platform responsibly. The good news is that AI-powered facial age estimation offers a smarter path to compliance, one that balances accuracy, privacy, and user experience. By leveraging advanced facial analysis technology (focused purely on age, not identity), businesses can protect minors from harmful content and services while maintaining user trust and minimizing friction. This approach has proven itself both with regulators, where authorities have approved and even endorsed such solutions, and in real-world deployments scaling to millions of users. Companies that adopt facial age verification are finding that they can meet legal obligations in multiple jurisdictions simultaneously, future-proofing their operations against the evolving patchwork of age-restriction laws. They’re also discovering operational benefits: faster onboarding, lower costs, and higher completion rates, all while upholding strong privacy standards.

In essence, modern age verification is about achieving the right balance. Compliance demands precision – you must accurately distinguish adults from underage users – but it also demands respecting user rights and keeping the experience as seamless as possible. AI-powered solutions strike that balance by providing high assurance with low intrusion. They embody the principle that we can ask only what’s necessary (in this case, “Are you old enough?”) and nothing more. As a result, businesses can enforce rules without playing Big Brother and users can verify their age without feeling violated. Moving forward, as regulators continue to clamp down on underage access, those who have deployed these AI age checks will be a step ahead: they’ll be seen as proactive, responsible actors in their industry, rather than scrambling to catch up with compliance. In the words of one industry leader, “reliable age verification is no longer optional – it’s essential” for safeguarding users and staying on the right side of the law. AI-driven facial age verification provides an effective, privacy-conscious, and scalable solution to meet that essential need. It allows businesses to not only comply with confidence but also to create a safer digital environment where trust is maintained and the only people accessing age-restricted experiences are those who are truly old enough to do so.
